Project Information

- Partner: CSIR with Praekelt

- Students: Joseph Sefara (UL), Thulani Khumalo (UCT)

- Project Lead: Dr. Vukosi Marivate

- Project Mentors: Abiodun Modupe

- Year: 2017/2018

Project Description

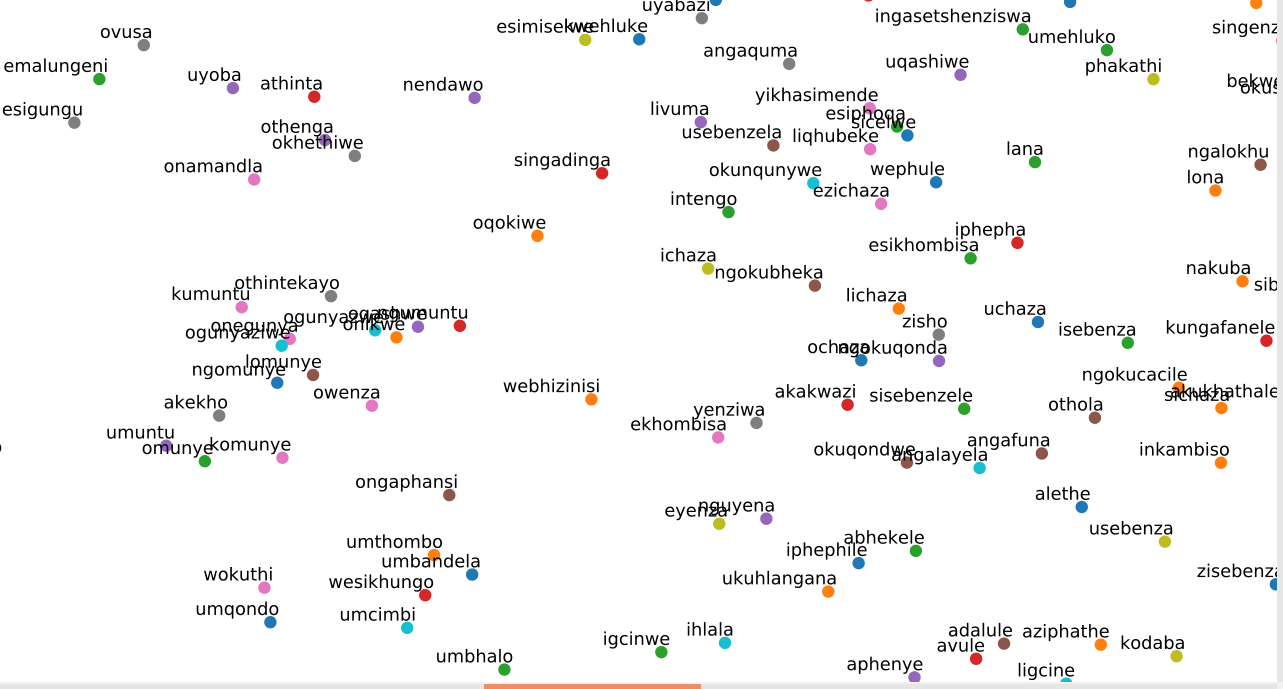

Statistical machine translation (SMT) has been a promising paradigm for extracting knowledge from parallel pairs of sentences. Most SMT methods achieve competitive accuracy in empirical evaluations on training data for language pairs such as English-French, but learn very poorly for low-resource languages. This behaviour makes SMT a poor option for low-resource languages such as Sepedi and isiZulu in South Africa, where parallel data are scarce. Pre-trained word embeddings such as fastText, word2vec, and GloVe improve on word-level lexical features by placing each word in a lower-dimensional space, using distributional information obtained from unlabeled data. However, the effectiveness of word embeddings for downstream NLP tasks in low-resource languages is limited: vocabulary coverage is small, and the semantic similarity between pairs of words in a corpus is not well represented. The aim of this study is to measure and improve the quality of both the word embeddings and the semantic similarity training and testing datasets for our local languages. We developed and evaluated the system on data from the internet and on secondary data from a language resource management agency.
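One common way to measure embedding quality against a semantic similarity dataset is to compare the cosine similarity of each word pair with a human-assigned score, using Spearman rank correlation. The sketch below illustrates that idea only; it is not the project's actual evaluation code. The function names, the placeholder words (`word_a`, `word_b`, and so on), the two-dimensional vectors, and the scores are all hypothetical and chosen for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention for zero vectors (an assumption of this sketch)
    return dot / (norm_u * norm_v)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0.0 or sd_y == 0.0:
        return 0.0
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def evaluate(embeddings, gold_pairs):
    """Return (Spearman correlation, number of out-of-vocabulary pairs skipped)."""
    model_scores, human_scores, skipped = [], [], 0
    for word1, word2, human_score in gold_pairs:
        if word1 not in embeddings or word2 not in embeddings:
            skipped += 1  # limited vocabulary: the pair cannot be scored
            continue
        model_scores.append(cosine_similarity(embeddings[word1], embeddings[word2]))
        human_scores.append(human_score)
    return spearman(model_scores, human_scores), skipped


# Hypothetical toy data: placeholder words, 2-d vectors, made-up human scores.
embeddings = {
    "word_a": [1.0, 0.0],
    "word_b": [0.9, 0.1],
    "word_c": [0.0, 1.0],
    "word_d": [0.5, 0.5],
}
gold_pairs = [
    ("word_a", "word_b", 9.0),
    ("word_a", "word_d", 5.0),
    ("word_a", "word_c", 1.0),
    ("word_a", "word_x", 7.0),  # word_x is out of vocabulary
]

if __name__ == "__main__":
    rho, skipped = evaluate(embeddings, gold_pairs)
    print("Spearman correlation:", rho, "| skipped pairs:", skipped)
```

Reporting the number of skipped pairs alongside the correlation matters for low-resource languages, because a small vocabulary can make a model look better than it is by silently dropping the hardest pairs.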